ChatGPT and other generative AI tools can be powerful for businesses. They help teams work faster, automate tasks, and improve productivity. But without the right policies in place, these tools can expose sensitive data and introduce unnecessary risk.

A recent KPMG study found that only 5% of U.S. executives have a mature AI governance program. Another 49% plan to build one but haven’t started yet. This suggests that many organizations are using AI without clear rules to protect themselves.

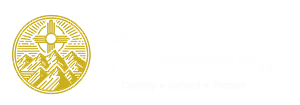

If you want to use AI in a safe, secure, and responsible way, this guide outlines the five essential rules your organization needs to follow.

Why Businesses Are Turning to Generative AI

Generative AI tools like ChatGPT and DALL-E help teams:

- Create content faster
- Summarize large documents
- Draft reports
- Support customer service
- Improve workflows

The National Institute of Standards and Technology (NIST) notes that AI can improve decision-making and boost productivity across many industries. But these benefits only matter when AI is used safely and responsibly.

5 Essential Rules to Govern ChatGPT and Generative AI

These rules help you protect sensitive data, stay compliant, and gain real value from AI tools.

Rule 1: Set Clear Boundaries Before Using AI

Start with clear rules about how AI can and cannot be used. Without guidance, employees may enter sensitive or confidential data into public tools.

Your AI policy should include:

- Approved use cases
- Tasks that AI cannot be used for
- Who owns AI oversight
- How often guidelines are reviewed

Clear rules help your teams use AI with confidence while reducing risk.
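For teams that want to enforce these boundaries in internal tooling, the four policy items above can also be captured in a small machine-readable form. The following is a minimal sketch in Python, not a standard format: the `AIPolicy` class, its field names, the task labels, the contact address, and the 90-day interval are all hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class AIPolicy:
    """Hypothetical machine-readable summary of a written AI policy."""
    approved_use_cases: frozenset[str]   # tasks AI may be used for
    prohibited_tasks: frozenset[str]     # tasks AI must never be used for
    oversight_owner: str                 # person or team accountable for AI oversight
    review_interval_days: int            # how often the guidelines are reviewed
    last_reviewed: date

    def is_allowed(self, task: str) -> bool:
        # Deny by default: a task must be explicitly approved and not prohibited.
        return task in self.approved_use_cases and task not in self.prohibited_tasks

    def review_due(self, today: date) -> bool:
        # True once the review interval has elapsed since the last review.
        return today >= self.last_reviewed + timedelta(days=self.review_interval_days)


# Example values are placeholders, not recommendations.
policy = AIPolicy(
    approved_use_cases=frozenset({"draft_blog_post", "summarize_public_document"}),
    prohibited_tasks=frozenset({"process_client_records", "make_hiring_decision"}),
    oversight_owner="ai-oversight@example.com",
    review_interval_days=90,
    last_reviewed=date(2025, 1, 1),
)
```

The deny-by-default check means a task nobody has thought about yet is treated as not approved until the policy owner adds it.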

Rule 2: Always Keep a Human in the Loop

AI can sound accurate even when it’s wrong. That’s why human review is essential.

AI should support your team, not replace it. Humans must review AI-generated:

- Client communications
- Public content
- Reports
- Documents used for decisions

The U.S. Copyright Office also states that purely AI-generated content cannot be copyrighted, which means your business may not legally own it. Meaningful human input strengthens your claim to originality and ownership.

Rule 3: Keep AI Use Transparent and Logged

Transparency builds trust and protects your organization. You should always know:

- Who is using AI
- What tools they are using
- What prompts they enter
- What data they handle

Logging prompts and model details creates a record that supports compliance reviews and helps you analyze how AI is being used across your business.
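One simple way to keep such a record is an append-only log with one entry per AI interaction. The sketch below is illustrative only and uses the Python standard library. The function names, the field names, the `data_class` labels, and the example values are assumptions made for this example, not part of any specific tool.

```python
import json
from datetime import datetime, timezone


def build_log_record(user: str, tool: str, model: str, prompt: str, data_class: str) -> dict:
    """Return one audit record covering who, which tool, what prompt, and what data."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,              # who is using AI
        "tool": tool,              # what tool they are using
        "model": model,            # model details, as reported by the tool
        "prompt": prompt,          # what prompt they entered
        "data_class": data_class,  # what kind of data the prompt handles
    }


def append_record(path: str, record: dict) -> None:
    """Append the record as one JSON line so the log is easy to review later."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")


# Hypothetical example entry.
record = build_log_record(
    user="jdoe",
    tool="ChatGPT",
    model="example-model",
    prompt="Summarize this public press release.",
    data_class="public",
)
```

Calling `append_record("ai_usage.jsonl", record)` would add the entry to a local file. Because the log stores prompts, it should be protected like any other internal record.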

Rule 4: Protect Data and Intellectual Property

Every prompt sent to a public AI tool is a form of data sharing. If that prompt includes client details, financial information, or internal documents, you may breach privacy laws or contracts.

Your AI policy should clearly state:

- What data is allowed in AI tools
- What data is prohibited
- How employees should handle sensitive information

No confidential or client-specific information should ever be entered into public AI tools. This step alone protects your business from major risks.
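A written rule can be backed by an automated check that screens prompts before they leave your network. The following is a rough sketch, assuming a handful of simple regular expressions. The pattern names and the patterns themselves are illustrative. They will miss many kinds of confidential data, so a check like this complements employee training and does not replace it.

```python
import re

# Illustrative patterns only; a real deployment would need a reviewed, broader set.
SENSITIVE_PATTERNS = {
    "email_address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_like_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def find_sensitive_data(prompt: str) -> list[str]:
    """Return the name of every pattern that matches somewhere in the prompt."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(prompt)]


def is_safe_to_send(prompt: str) -> bool:
    """A prompt passes only if none of the patterns match."""
    return not find_sensitive_data(prompt)
```

For example, `find_sensitive_data("Client SSN is 123-45-6789, email jane@example.com")` flags both the email address and the Social Security number, so the prompt would be blocked before reaching a public tool.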

Rule 5: Make AI Governance an Ongoing Process

AI changes fast. Your policy must change with it.

Plan to:

- Review your AI policy every quarter
- Train employees regularly
- Update guidelines when new risks appear
- Reevaluate tools as technology evolves

Ongoing governance keeps your business safe as AI capabilities grow.

Why AI Governance Matters More Than Ever

Using these five rules creates a strong foundation for responsible AI use. Good governance:

- Reduces the chance of data exposure
- Improves client trust
- Keeps your business compliant
- Supports safe and meaningful innovation
- Strengthens your brand reputation

Clear AI policies help your teams work faster and smarter without compromising security.

Turn Responsible AI Use into a Business Advantage

Generative AI can transform your operations, but only if it’s used safely. With a strong AI governance framework, you can reduce risk, support innovation, and protect your organization.

If you want help building an AI Policy Playbook for your business, we’re here to support you with clear guidance and practical tools.

Contact us to start building a safer, smarter AI program today.